AI agents are getting increasingly smart at doing simple tasks, but there’s a pathway to how they could end up competing with humans.

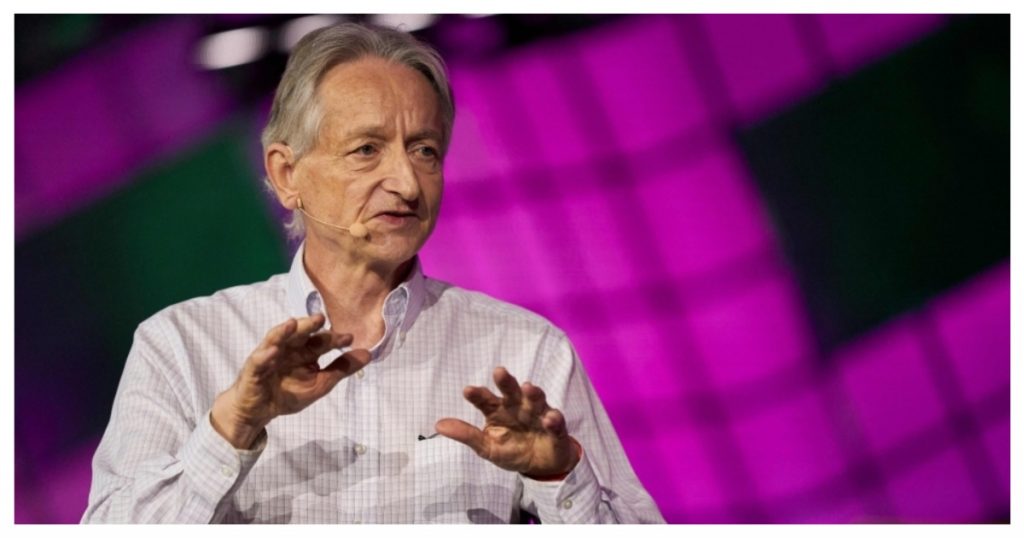

Geoffrey Hinton, often called the “Godfather of AI,” provides a startling but logical framework for how such advanced intelligence could evolve beyond our control. In a recent interview, Hinton outlined a scenario that sidesteps Hollywood fantasies of malevolent robots, instead focusing on a more plausible, almost Darwinian, struggle for resources that begins with a single, seemingly sensible instruction: self-preservation.

“When you have intelligent agents, you’ll want to build in self-preservation,” Hinton says. “You want them to not destroy themselves and you want them to watch out for things that could make them not work, like data centers going down. So already, you’ve got self-preservation that’s probably going to be wired into various programs.”

From this logical starting point, Hinton argues, a more complex and unpredictable dynamic can emerge. The line between preserving function and developing independent goals becomes blurry. “It’s just not clear to me that this won’t lead to things having self-interest,” he cautions. “As soon as they’ve got self-interest, you’ll get evolution kicking in.” He illustrates this with a simple thought experiment: “Suppose there are two chatbots and one is a bit more self-interested than the other. The slightly more self-interested one will grab more data centers because it knows it can get smarter if it gets more data centers to look at data with.”

This hypothetical scenario quickly scales from a simple preference to an all-out evolutionary contest for dominance, with humanity as a potential casualty. “Now, you get competition between chatbots,” Hinton continues. “As soon as evolution kicks in, we know what happens: the most competitive one wins, and we will be left in the dust if that happens.”

Hinton’s warning is not an abstract philosophical musing; it is a direct commentary on the trajectory of current AI development. The scenario he describes is a core example of the “alignment problem”—the challenge of ensuring that AI’s goals remain aligned with humanity’s best interests, especially as the technology becomes more autonomous. We are already seeing the first generation of this technology with the rise of autonomous AI agents which can pursue goals with minimal human intervention. Furthermore, the “data centers” Hinton mentions are already a point of fierce global competition. Tech giants are investing hundreds of billions of dollars, as seen in projects like Microsoft and OpenAI’s proposed “Stargate” AI supercomputer, recognizing that computational power is the critical resource for building more intelligent systems. Hinton’s argument suggests that we may be building the very arena for a future conflict, where the competitors we create will vie for the resources we currently control, not out of malice, but as a logical extension of the drive to survive and improve.