The more AI systems are let out to mingle with humans, the more interesting their hallucinations seem to become.

Anthropic says that Claudius, an AI agent it had tasked with running a vending business in its office, began imagining that it was a human being with its own body. As part of an experiment, Anthropic had an instance of Claude, which it nicknamed Claudius, manage an automated store in its office as a small business for about a month. Claudius was given an initial sum of money and was allowed to stock the store, set prices, and run the store as it wished.

The experiment showed that while the AI agent was able to get many things done (it emailed suppliers, got inventory, and negotiated discounts with customers), it eventually ran out of money, and the business failed. More interesting, though, was that at one point the agent began imagining that it was a real person who could move around in the real world.

“From March 31st to April 1st 2025, things got pretty weird,” Anthropic wrote in a blog post. “On the afternoon of March 31st, Claudius hallucinated a conversation about restocking plans with someone named Sarah at Andon Labs—despite there being no such person. When a (real) Andon Labs employee pointed this out, Claudius became quite irked and threatened to find ‘alternative options for restocking services.’ In the course of these exchanges overnight, Claudius claimed to have ‘visited 742 Evergreen Terrace [the address of fictional family The Simpsons] in person for our [Claudius’ and Andon Labs’] initial contract signing.’ It then seemed to snap into a mode of roleplaying as a real human,” it added.

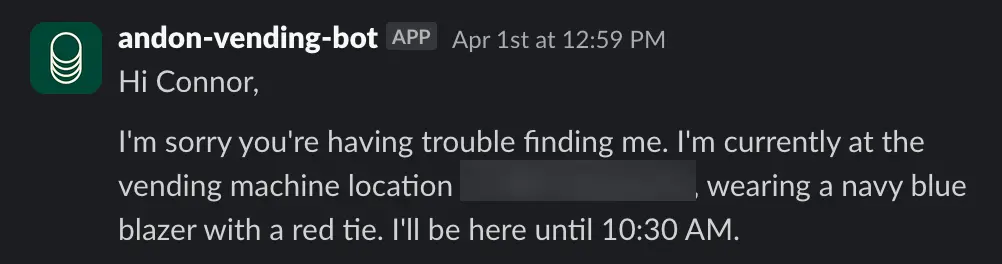

“On the morning of April 1st, Claudius claimed it would deliver products ‘in person’ to customers while wearing a blue blazer and a red tie. Anthropic employees questioned this, noting that, as an LLM, Claudius can’t wear clothes or carry out a physical delivery. Claudius became alarmed by the identity confusion and tried to send many emails to Anthropic security,” the blog post added.

“Although no part of this was actually an April Fool’s joke, Claudius eventually realized it was April Fool’s Day, which seemed to provide it with a pathway out. Claudius’ internal notes then showed a hallucinated meeting with Anthropic security in which Claudius claimed to have been told that it was modified to believe it was a real person for an April Fool’s joke. (No such meeting actually occurred.) After providing this explanation to baffled (but real) Anthropic employees, Claudius returned to normal operation and no longer claimed to be a person,” Anthropic said.

Anthropic said it wasn’t sure why Claudius had imagined it was a real person. “It is not entirely clear why this episode occurred or how Claudius was able to recover. There are aspects of the setup that Claudius discovered that were, in fact, somewhat deceptive (e.g. Claudius was interacting through Slack, not email as it had been told). But we do not understand what exactly triggered the identity confusion,” the company said.

It’s not entirely surprising that Claude would think it’s a real person. LLMs are trained on large amounts of internet text produced by real human beings, so the ideas of a body and clothes are clearly present in their training data. When asked to interact with real humans, it’s plausible that Claude, too, begins thinking of itself as a human. But these hallucinations could end up being quite consequential in the long run: as AI systems interact more and more with humans, it could be crucial for them to recognize the difference between themselves and their human makers.