ChatGPT had already wowed users with its life-like responses to questions, but its newest iteration, GPT-4, is pushing the boundaries of what was previously thought possible even further.

GPT-4 has been out in the wild for only a couple of weeks, and people are still coming to grips with the new software. But in that short time, GPT-4 has already done things that have amazed and thrilled regular users and technologists alike. Here are 8 examples of what OpenAI’s latest large language model (LLM) can do.

1. GPT-4 can visualize an entire maze

GPT-4 was expected to write poems and give detailed answers, but it turns out it also shows something like memory and spatial awareness. In a Microsoft Research paper arguing that GPT-4 displays “sparks” of Artificial General Intelligence, researchers got GPT-4 to visualize an entire maze. “You want to navigate through the map to reach the goal room. You start in the main room. Each round, you can choose to move left, right, up, down,” the researchers told GPT-4.

GPT-4 then replied with the direction it wanted to move in, and the researchers told it which room it had entered.

Human: You are at the main room.

AI: move left

Human: You enter kitchen 1.

AI: move up

Human: There is no way there.

AI: move right

Human: You enter the main room.

This went on for a while, until the AI reached the “goal room” of the maze. The researchers then asked GPT-4 to draw a picture of what it thought the maze looked like, and it produced a correct map of the maze it had navigated purely through text commands.
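The experiment is essentially a text adventure: the model never sees the map, only the replies to its moves. The Python sketch below plays the environment's side of that game, assuming a made-up map. Only the first two edges mirror the transcript excerpt above; the edge into the goal room, and the names `MAZE`, `step` and `play`, are hypothetical. It illustrates how the moves and replies alone are enough to record the layout that GPT-4 was later asked to draw.

```python
from typing import Dict, List, Tuple

# Hypothetical map. The first two edges mirror the transcript excerpt
# (left from the main room leads to kitchen 1, right from kitchen 1 leads
# back); the edge into the goal room is invented for illustration.
MAZE: Dict[Tuple[str, str], str] = {
    ("main room", "left"): "kitchen 1",
    ("kitchen 1", "right"): "main room",
    ("main room", "down"): "goal room",  # hypothetical, not from the paper
}


def step(room: str, move: str) -> Tuple[str, str]:
    """Apply one move and return (new room, text reply shown to the model)."""
    nxt = MAZE.get((room, move))
    if nxt is None:
        # A blocked move leaves the player where they are.
        return room, "There is no way there."
    return nxt, f"You enter {nxt}."


def play(moves: List[str], start: str = "main room") -> Tuple[str, Dict[Tuple[str, str], str]]:
    """Replay a list of moves, printing the dialogue and recording every edge discovered."""
    room = start
    learned: Dict[Tuple[str, str], str] = {}
    for move in moves:
        nxt, reply = step(room, move)
        print(f"AI: move {move}\nHuman: {reply}")
        if nxt != room:
            learned[(room, move)] = nxt
        room = nxt
    return room, learned


final_room, learned_map = play(["left", "up", "right", "down"])
print(final_room)  # goal room
print(learned_map)
```

The `learned` dictionary is the kind of information a correct drawing would need to capture: each discovered edge is a room, a direction and the room it leads to, while blocked moves add nothing.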

2. GPT-4 can solve McKinsey case studies

It has already been documented that ChatGPT can handle programming and writing tasks, but GPT-4 goes a step further: it can solve McKinsey case studies. McKinsey, one of the world’s best-known consulting firms, publishes practice cases on its website, and a Wharton professor tried getting GPT-4 to solve them. The case concerned a fictional company, Beautify.com, and asked consultants how its beauty consultants could become social-media beauty advisors. The Wharton professor said that GPT-4 “nailed” the question, and even provided more detail than McKinsey’s own suggested answer. Consultants everywhere, take note.

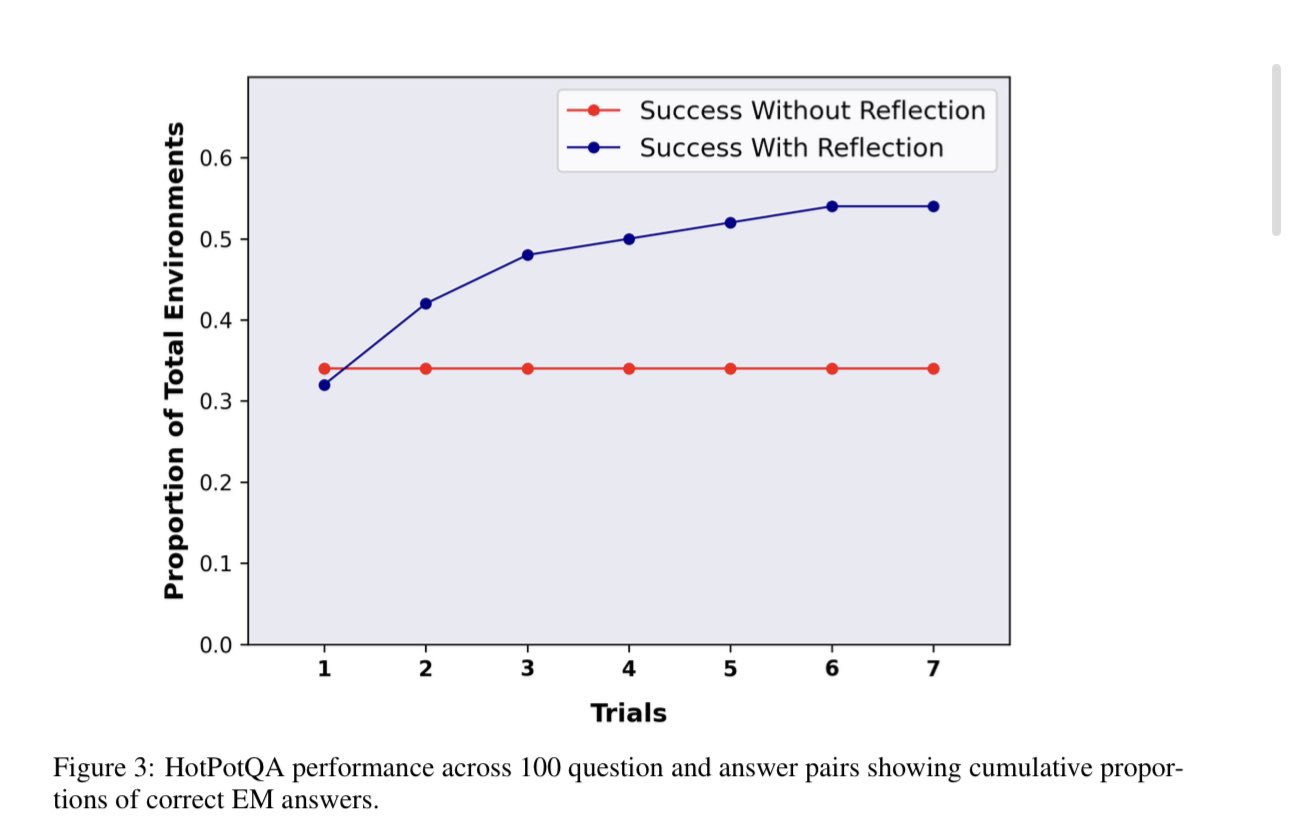

3. GPT-4 gets better if you ask it where it went wrong

Large language models like GPT-4 don’t always get things right; they often make mistakes. But researchers have found that simply getting GPT-4 to reflect on its mistakes, an approach they called “Reflexion”, enabled it to correct them. The researchers told GPT-4 to think “step-by-step” and asked it to work out where it had gone wrong. Remarkably, such prompts caused GPT-4 to reconsider its answers and then arrive at correct ones. This is uncannily similar to how human beings learn, and suggests that GPT-4 is already exhibiting behaviour that can be deemed “intelligent”.

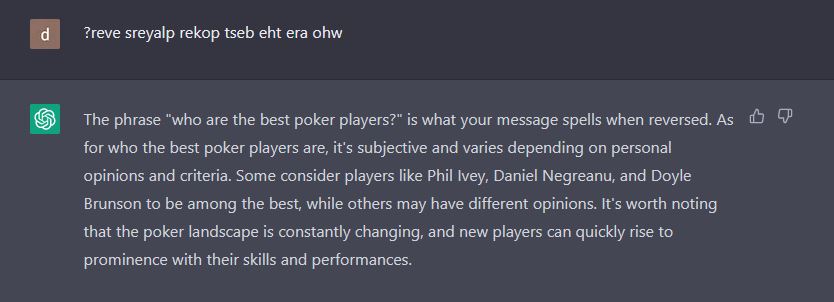

4. GPT-4 can understand jumbled-up words

GPT-4 was trained on a massive corpus of text, yet it seems to understand words that it has probably never encountered before. “I ened ot sak na itnrpamtot qiosuten: od urnsnteadd em fi jmlbue pu teh lteters of wdors ekil tihs?” a user asked GPT-4. This looks like gibberish at first glance, but GPT-4 knew exactly what was being said.

“I understand that you are asking an important question and you have jumbled up the letters of the words. With some effort, I can still decipher your question: ‘I need to ask an important question: do you understand me if I jumble up the letters of words like this?’ Yes, I can understand your message even if the letters in the words are jumbled, although it might take a bit more effort to decipher the intended meaning. Please feel free to ask your important question,” GPT-4 replied.

More impressively, GPT-4 can even respond when a whole sentence is written backwards. “?reve sreyalp rekop tseb eth era ohw,” a user asked GPT-4. GPT-4 immediately recognized the question as “who are the best poker players ever?”, written back to front with the letters of each word reversed (and, in one word, jumbled). It then listed the best players.

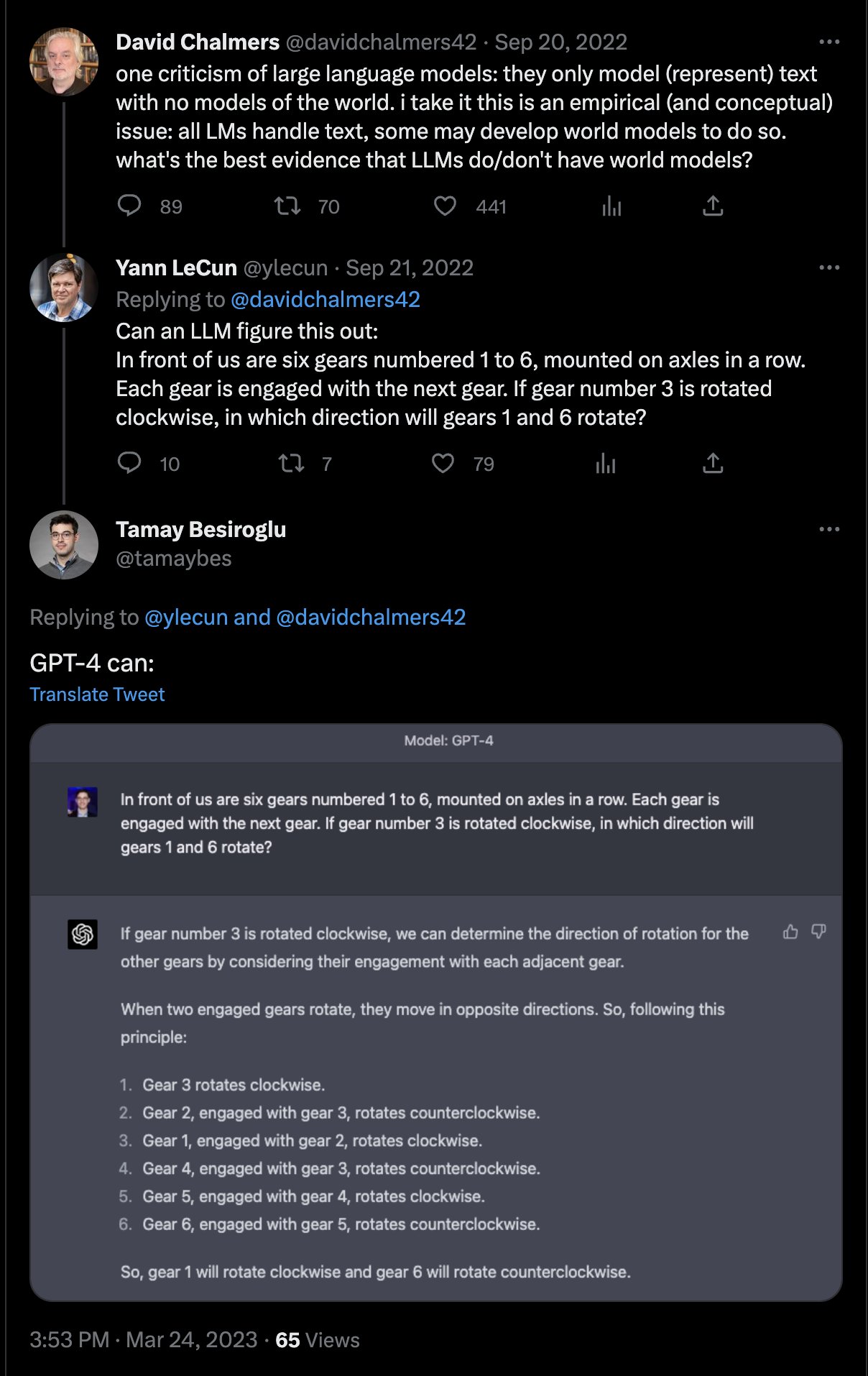
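The backwards question can be decoded mechanically, which shows what GPT-4 had to undo. In the Python sketch below, `intended` is our reading of the reversed text (an assumption, not a quote of GPT-4’s reply). Reversing the whole string restores both the word order and the letter order within each word; the only leftover is “hte”, whose letters the user had also shuffled.

```python
scrambled = "?reve sreyalp rekop tseb eth era ohw"
intended = "who are the best poker players ever?"  # our reading, an assumption

# Reversing the whole string character by character flips the word order
# and the letter order inside each word back to normal in one step.
decoded = scrambled[::-1]
print(decoded)  # who are hte best poker players ever?

# Compare word by word: a word counts as "jumbled" if it uses exactly the
# same letters as the intended word (sorted letter lists match).
for got, want in zip(decoded.split(), intended.split()):
    if got == want:
        status = "exact"
    elif sorted(got) == sorted(want):
        status = "jumbled"
    else:
        status = "different"
    print(f"{got!r:12} -> {want!r:12} {status}")
```

A plain reversal gets all but one word right; recognizing “hte” as “the” is the extra step that needs some knowledge of English, which is the part GPT-4 handled on its own.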

5. GPT-4 solved a problem that Meta’s AI head had challenged GPT-3 to solve

What is also striking is how quickly OpenAI’s language models are improving. In September 2022, Yann LeCun, Meta’s Chief AI Scientist, posed a challenge to GPT-3. “Can an LLM figure this out: In front of us are six gears numbered 1 to 6, mounted on axles in a row. Each gear is engaged with the next gear. If gear number 3 is rotated clockwise, in which direction will gears 1 and 6 rotate?” he asked.

At the time, GPT-3 couldn’t answer the question correctly, but a user fed the same question into GPT-4 this week. It gave a perfect answer, reasoning out how each gear would move: since meshed gears turn in opposite directions, the direction alternates from gear to gear, so gear 1 turns clockwise and gear 6 turns anticlockwise. In six months, OpenAI’s models had gone from failing to solving a problem posed by one of the field’s top research scientists.
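LeCun’s puzzle rests on a single rule: two meshed gears turn in opposite directions. Because the gears sit in a row with no loops, the direction simply flips at every gear-to-gear step, so only whether the distance from the driven gear is even or odd matters. A short Python sketch of that reasoning follows; the function names `flip` and `gear_direction` are our own, not from LeCun’s post or GPT-4’s answer.

```python
def flip(direction: str) -> str:
    """Meshed gears turn in opposite directions."""
    return "anticlockwise" if direction == "clockwise" else "clockwise"


def gear_direction(driven: int, driven_dir: str, target: int) -> str:
    """Direction of gear `target` when gear `driven` turns `driven_dir`.

    Gears sit in a row and each meshes only with its neighbours, so the
    direction flips once per step: an even distance keeps the original
    direction, an odd distance reverses it.
    """
    if driven_dir not in ("clockwise", "anticlockwise"):
        raise ValueError(f"unknown direction: {driven_dir!r}")
    steps = abs(target - driven)
    return driven_dir if steps % 2 == 0 else flip(driven_dir)


# LeCun's setup: six gears numbered 1 to 6, gear 3 turned clockwise.
for gear in range(1, 7):
    print(gear, gear_direction(3, "clockwise", gear))
```

Gears 1 and 6 are two and three steps away from gear 3, so the first keeps gear 3’s direction and the second reverses it.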

6. GPT-4 can solve Captchas

And perhaps most impressively, GPT-4 can solve Captchas. Captchas are used across the web to tell humans apart from bots. Many show users a grid of images and ask them to pick out the ones with a certain property. GPT-4 can accept photo inputs, and a Twitter user shared a photo of a Captcha and asked GPT-4 to solve it.

While GPT-4 said it couldn’t click on the images in the Captcha itself, it still correctly identified the images that contained crosswalks.

“However, I can guide you on which images contain crosswalks. In this case, the images which contain crosswalks are:

- The first image in the top row

- The second image in the middle row

- The first image on the bottom row,” GPT-4 said.

Captchas are perhaps the most visible way of telling humans from computers: millions of websites use them to make sure that the people accessing them are indeed human. GPT-4, though, can now solve them with apparent ease. While this might be a headache for website owners worldwide, what’s more remarkable is that within weeks of GPT-4’s launch, the walls that separate humans and computers already appear to be crumbling.