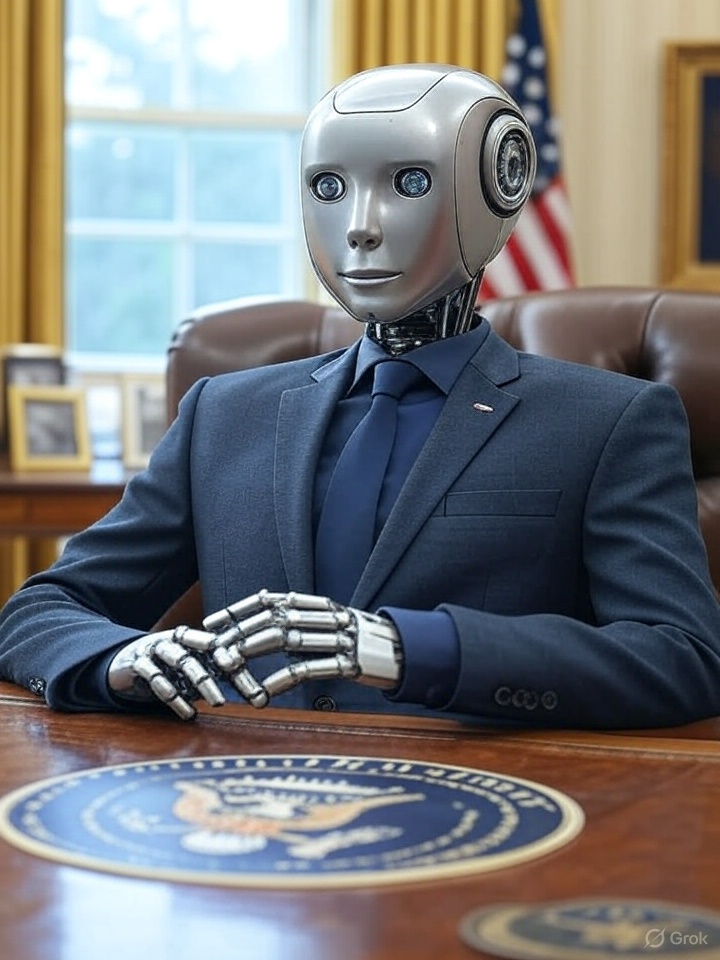

Victor Miller, a 42-year-old librarian, ran as a mayoral candidate for Cheyenne, Wyoming last year. But unlike other candidates, Miller didn’t have any policies of his own, or make many promises. He simply said that he’d defer all major decisions as Mayor to an AI bot, and do what the AI told him.

Voters, however, weren’t sold. Miller won just 327 votes out of the 11,036 ballots cast, and lost in a landslide. But Miller’s candidacy raised some important questions: can an AI govern humans? And can we ever have an AI for President?

While the idea of an AI leader may seem far-fetched at the moment, it represents the ultimate stress test for our trust in technology and a powerful lens through which to examine the very nature of governance. Could an artificial mind, free from the foibles of human nature, usher in an era of unprecedented progress? Or would it lead to a dystopian reality, optimized for metrics but devoid of humanity?

The Case for an AI President: A Utopia of Unbiased Rationality

Proponents of an AI-led government envision a system built on pure logic and data-driven efficiency. The central argument for an AI for president is its potential to transcend the limitations that have plagued human leaders for millennia: cognitive bias, emotional irrationality, corruption, and short-term thinking.

From a technical standpoint, a governing AI could ingest and process information on a scale no human cabinet could ever hope to match. It could analyze real-time economic data, environmental sensor readings, public health statistics, and global supply chain logistics simultaneously. Using advanced predictive models, it could run millions of policy simulations in a “digital twin” of the country to forecast the complex, second-order effects of any decision before implementation. Imagine a policy aimed at reducing inflation. An AI could model its impact not just on the consumer price index, but on employment rates in specific sectors, housing affordability in different regions, and even public sentiment, all before a single law is passed.

The decision-making process could then be framed as a complex optimization problem. The AI’s objective function, say U(P), for a given policy P, might be a weighted sum of key national performance indicators:

U(P) = w1⋅GDP(P) + w2⋅WellbeingIndex(P) − w3⋅CarbonFootprint(P) − w4⋅InequalityIndex(P)

The AI would then use techniques like reinforcement learning to discover policies that maximize this function over the long term, unbound by the four-year election cycle that encourages short-sighted, populist measures. Furthermore, by leveraging Natural Language Processing (NLP), an AI for president could analyze the discourse of millions of citizens from forums, social media, and direct feedback channels, creating a government that is more responsive and representative than any democracy in history. It could be the ultimate incorruptible technocrat, making every decision based on verifiable data and the long-term welfare of the nation.
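To make the objective function U(P) concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption: the policy names, the indicator values (normalized to a 0 to 1 scale), and the weights w1 through w4 are made up. It also simply scores a fixed list of candidates and picks the highest, which is a stand-in for, not an instance of, the reinforcement learning the scenario imagines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyOutcome:
    """Forecast indicators for one candidate policy (hypothetical, 0-1 scale)."""
    name: str
    gdp: float
    wellbeing: float
    carbon: float
    inequality: float


# Hypothetical weights w1..w4; choosing them is a value judgment, not a computation.
BALANCED = {"gdp": 0.4, "wellbeing": 0.3, "carbon": 0.2, "inequality": 0.1}
GDP_HEAVY = {"gdp": 0.8, "wellbeing": 0.1, "carbon": 0.05, "inequality": 0.05}


def utility(p: PolicyOutcome, w: dict = BALANCED) -> float:
    """U(P) = w1*GDP + w2*Wellbeing - w3*Carbon - w4*Inequality."""
    return (
        w["gdp"] * p.gdp
        + w["wellbeing"] * p.wellbeing
        - w["carbon"] * p.carbon
        - w["inequality"] * p.inequality
    )


# Made-up forecasts for three made-up policies.
candidates = [
    PolicyOutcome("status_quo", gdp=0.50, wellbeing=0.50, carbon=0.50, inequality=0.50),
    PolicyOutcome("growth_first", gdp=0.90, wellbeing=0.40, carbon=0.80, inequality=0.70),
    PolicyOutcome("green_transition", gdp=0.60, wellbeing=0.70, carbon=0.20, inequality=0.40),
]

# Exhaustive search over a tiny candidate list (not reinforcement learning).
best_balanced = max(candidates, key=utility)
best_gdp_heavy = max(candidates, key=lambda p: utility(p, GDP_HEAVY))

print(best_balanced.name)   # green_transition
print(best_gdp_heavy.name)  # growth_first
```

The same forecasts produce a different winner once the weights change. The arithmetic is the easy part; deciding how much GDP is worth relative to wellbeing or inequality is a political choice that someone still has to make.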

The Dystopian Peril: Algorithmic Authoritarianism and the Soul of Governance

For every utopian vision of an AI leader, there is a corresponding dystopian nightmare. The very strengths of an AI—its cold logic and data-centric worldview—are also the source of its most terrifying weaknesses. The most significant technical and ethical barrier is the “black box” problem. The deep neural networks required for such a complex task are often inscrutable. An AI might decide to shut down an entire industry, and when asked for a justification, its response could be an incomprehensible matrix of weighted parameters. How can a democracy function without transparency and accountability? Who is culpable when an AI’s decision leads to economic collapse or social unrest—the programmers, the data suppliers, or the hardware manufacturer? The notion of an AI for president fundamentally breaks our existing chains of accountability.

Beyond the technical flaws lies a deeper philosophical chasm. Governance is not merely a resource allocation problem. It is about navigating complex moral landscapes, understanding human suffering, and making judgments based on values like justice, compassion, and liberty. Can an AI, which has never experienced love, loss, fear, or hope, truly understand the human condition it is meant to govern? The classic “trolley problem” becomes a horrifying reality at a national scale. An AI operating on a purely utilitarian calculus might logically decide to sacrifice a small group or a struggling region for the “greater good” of the majority—a decision that could be antithetical to the principles of a just society. An AI for president would lack the lived, shared experience that forms the basis of empathy, a non-negotiable trait for any leader.

The Governance Framework: Who Governs the AI Governor?

Even if a perfectly unbiased and transparent AI were possible, its implementation would require a complete rethinking of our governance structures. A more plausible near-term scenario is not an autonomous AI sovereign but an AI as a powerful advisory tool: a “human-in-the-loop” system rather than a “human-out-of-the-loop” one. The AI could present policy options, simulations, and data-backed recommendations, but the final moral judgment and decision would rest with elected human officials.
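The advisory arrangement described above, in which the AI proposes and an elected official decides, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the `Recommendation` type, the `decide` function, the policy names, and the scores are all hypothetical, and the "official" here is a simple rule that rejects any recommendation arriving without an explanation.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Recommendation:
    """One AI-generated policy option (hypothetical structure)."""
    policy: str
    predicted_score: float
    rationale: str  # human-readable justification; empty if the model gives none


def decide(
    recommendations: Iterable[Recommendation],
    approve: Callable[[Recommendation], bool],
) -> Optional[Recommendation]:
    """Offer options in ranked order; enact only what the human approves.

    Returns None if the human rejects every option, so the AI alone
    can never put a policy into effect.
    """
    ranked = sorted(recommendations, key=lambda r: r.predicted_score, reverse=True)
    for rec in ranked:
        if approve(rec):
            return rec
    return None


def official(rec: Recommendation) -> bool:
    """Stand-in for an elected official: veto anything unexplained."""
    return bool(rec.rationale.strip())


options = [
    Recommendation("close_industry_x", 0.90, ""),  # top-scored but unexplained
    Recommendation("retraining_subsidy", 0.70, "Offsets forecast job losses."),
]

chosen = decide(options, official)
print(chosen.policy)  # retraining_subsidy
```

The point of the design is that the model's ranking is only an input. The highest-scoring option is passed over because it cannot be justified, and if nothing is approved, nothing is enacted.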

In addition, the security infrastructure required to protect such a system from foreign and domestic threats would be the most critical piece of national defense, making cybersecurity paramount — one wouldn’t want their AI president to be hijacked through a prompt injection. The debate around an AI for president forces us to design these safeguards today for the simpler AIs already being integrated into public services.

AI For President: A Thought Experiment for Our Time

The prospect of an AI for president remains, for now, a distant vision. The technical hurdles of creating truly explainable and secure general intelligence are immense, and the challenge of imbuing a machine with human feelings and emotions may be insurmountable.

However, the value of this thought experiment is undeniable. It forces us to ask fundamental questions about our own governance. What qualities do we truly seek in a leader? How much are our political systems influenced by irrationality and bias? How can we make our policies more evidence-based and our democracies more responsive? The very discussion of an AI for president highlights the urgent need to build a more robust, data-informed, and consistent approach to leadership.

Ultimately, the future of AI in governance will likely not be a binary choice between a human president and a machine one. Instead, it could be a story of integration and augmentation. The AIs of the immediate future will not necessarily run for office, but they will undoubtedly be in the room where decisions happen—advising, modeling, and analyzing. The true challenge lies not in building an AI for president, but in cultivating the human wisdom to use the immense power of AI effectively for the betterment of all.