The rise of AI might seem entirely novel, but it has stark parallels with a paradigm we've long been used to.

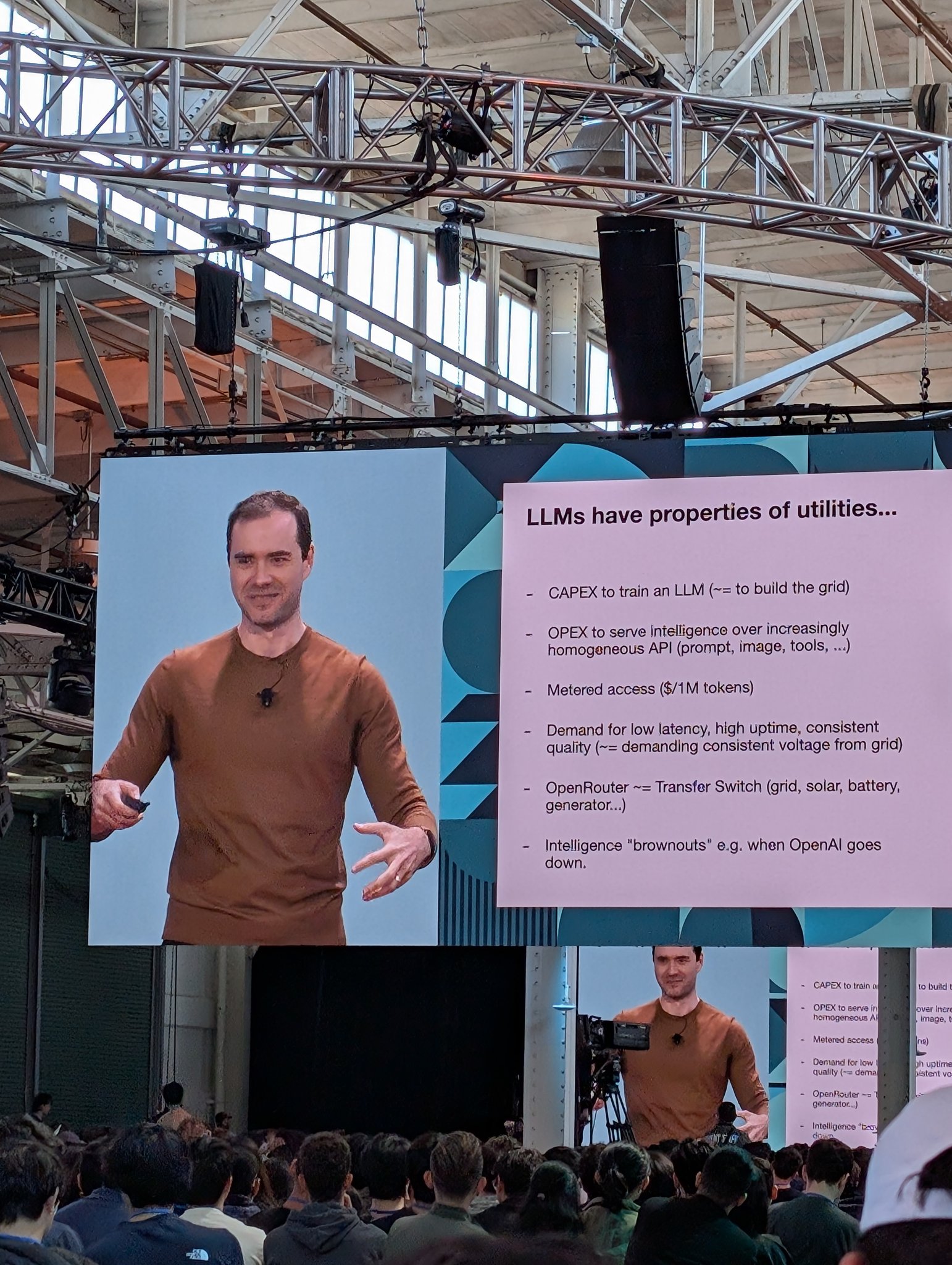

Andrej Karpathy, the former Director of AI at Tesla, has said that modern LLMs have properties of public utilities like electricity. He made the comparison while speaking at Y Combinator's AI Startup School.

"LLMs have the properties of utilities," one of his slides said. Karpathy argued that just as building an electricity grid requires capital expenditure (capex), training an LLM requires a similar kind of upfront capex. Training a modern frontier LLM can take hundreds of thousands of GPUs housed in large datacenters, so creating one from scratch demands a massive upfront investment.

But the comparison doesn't stop there. Karpathy says that, just as with electricity, there are also operating expenses involved in putting LLMs in people's hands. LLMs are also becoming homogeneous, since they are accessed through similar APIs, and electricity is homogeneous too: the same electricity can power your toaster or charge your car.

Like electricity, Karpathy says, LLMs are sold through metered access. The electricity bill depends on how much a user consumes, and LLM users are similarly charged according to their usage, typically something like $1 per million tokens.

Beyond these similarities, companies have been popping up that make the parallels even more compelling. OpenRouter, for instance, lets users choose between different LLMs and seamlessly switch between offerings from, say, Google, OpenAI and Anthropic. In the same way, the electrical supply can be set up to switch seamlessly between power from the conventional grid, solar panels, or batteries.

And just like electricity, LLMs suffer occasional downtime. ChatGPT recently suffered a major outage, and Google and Anthropic have also experienced outages or downtime caused by hitting capacity limits.

The comparison between electricity and modern LLM-based AI is clarifying and persuasive. It is one of many analogies Karpathy regularly comes up with: he has previously compared different stages of LLM training with a textbook, and has made several observations on the current state of AI. But the electricity comparison can also lead to some actionable insights. Just as electricity pervades modern life, LLMs might end up being used in many different aspects of how we live and play. Over time, intelligence could become commonplace, leading to a proliferation of devices and situations where it can be applied. And as with electricity, the economic gains from this new technology might not lie with the power generation companies; they could instead end up with companies that use this intelligence to power their goods and services.