Machine learning algorithms often require large data sets to work with, but Facebook's approach to categorizing hate speech might be a little unusual.

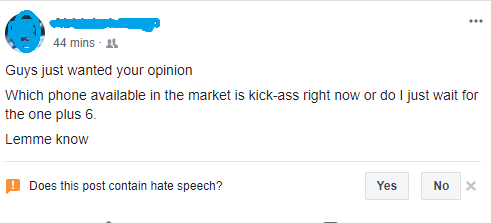

As of late evening on 1st May, Facebook seems to be asking users, below every post, whether the content they see is hate speech. "Is this post hate speech?" asks a bubble at the end of all kinds of posts on Facebook. Incredibly, the prompt doesn't seem to be triggered by specific keywords; it appears even on completely innocuous posts.

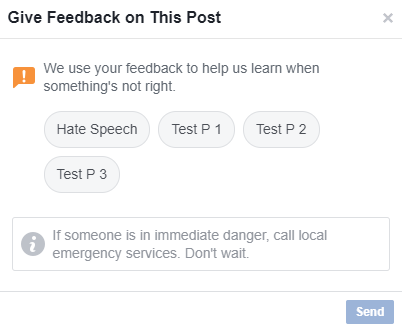

On pressing "No," the little widget disappears. But if users press "Yes," Facebook throws up a new dialog box asking for additional feedback. The options are Hate Speech and, more bizarrely, Test P1, Test P2 and Test P3.

If users press "Hate Speech," Facebook thanks them for their feedback. The same thing happens if they press Test P1, Test P2 or Test P3.

Because the hate speech widget was rolled out to users worldwide, the reaction on social media was instant.

Is this happening to anyone else? Every post on Facebook asks ‘Does this post contain hate speech? Yes No’

— Colin Chisholm (@ColinHantsCo) May 1, 2018

People couldn't believe that Facebook, a tech company that constantly touts its AI expertise, would ask users whether clearly harmless posts were hate speech.

Facebook — an industry leader in Artificial Intelligence who thinks that AI is going to solve all of its problems — is asking me if this post contains hate speech pic.twitter.com/4MqrGw8PFj

— brian feldman (@bafeldman) May 1, 2018

Memes mocking Facebook's AI prowess soon began circulating.

everyone: yo facebook your company has an issue with hate speech

facebook: our top engineers are designing algorithms to solve this problem

facebook: pic.twitter.com/Gn9QMCqRfF

— Produced by Philip M. Jamesson (@PhilJamesson) May 1, 2018

Making matters worse, Facebook was also asking users whether their own posts contained hate speech.

This is weird.

Facebook is asking me about whether *my own post* on Facebook contains hate speech.

And also, the post is just a NYT story about restaurant trends in Austin, to which I've added no commentary. pic.twitter.com/ZIrUW4IW4q

— Joe Weisenthal (@TheStalwart) May 1, 2018

No. My sandwich news does not, in fact, contain hate speech, Facebook. pic.twitter.com/0VWo9Z21yX

— Adam Rawnsley (@arawnsley) May 1, 2018

Some people didn't realize that Facebook was showing the prompt on all posts and began to wonder whether they were being singled out.

Facebook is censoring my account. Asking if my post contains "Hate Speech"

Give me a break. Opposing Radical Islam is NOT Hate Speech. pic.twitter.com/MNUBIWtXML

— Brigitte Gabriel (@ACTBrigitte) May 1, 2018

Check out the new "hate speech" prompt by @facebook. We are truly doomed. pic.twitter.com/cr5rjpgKCQ

— Gad Saad (@GadSaad) May 1, 2018

Hey @facebook the irony of you flagging my Fox hit on the importance of free speech as potentially “hate speech” is pretty rich. pic.twitter.com/bSlYqpk7kO

— Spencer Brown (@itsSpencerBrown) May 1, 2018

Facebook has yet to comment on the new feature, but it's hard to believe it was intentional, especially in the form in which it was released. Even if Facebook wanted users to flag posts they thought contained hate speech, it's unlikely the company would ask for feedback on every single post. And the options labelled Test P1, P2 and P3 suggest that the feature was still in development when it was rolled out to users. Controlling hate speech is a serious issue for Facebook (it came up repeatedly during Mark Zuckerberg's Congressional testimony a few weeks ago), but the company's efforts in this area clearly aren't off to the best start.