LLMs have produced some remarkable results over the last couple of years, but their detractors say they aren't really all that impressive. Because LLMs work simply by predicting the next word of their answer, one word at a time, some critics argue that they're little more than stochastic parrots with no real reasoning ability. But one of the most prominent voices in the field believes otherwise.

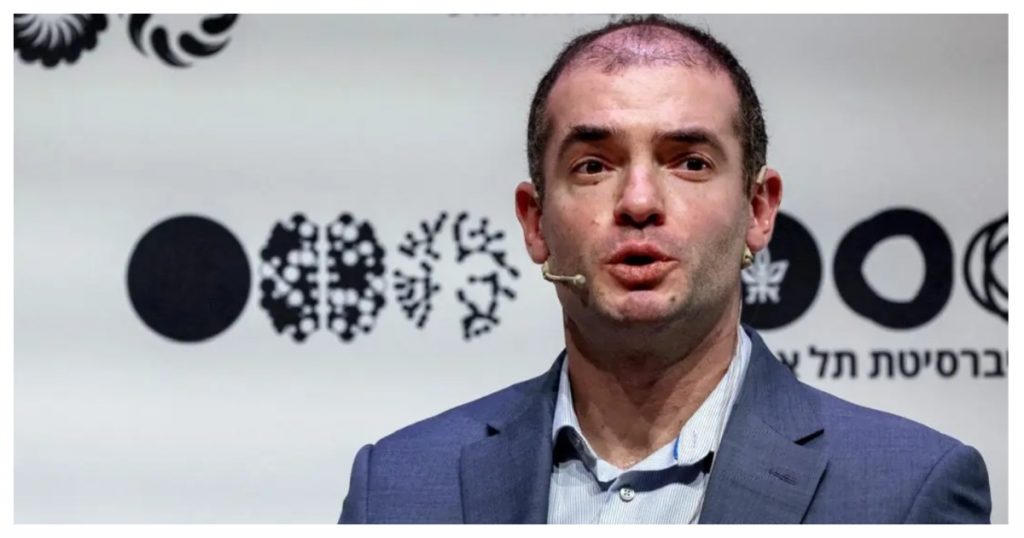

Former OpenAI Chief Scientist Ilya Sutskever believes that simply predicting the next word can be evidence of a high level of reasoning ability. "(I will) give an analogy that will hopefully clarify why more accurate prediction of the next word leads to more understanding, real understanding," he said in an interview.

“Let’s consider an example. Say you read a detective novel. It’s like a complicated plot, a storyline, different characters, lots of events. Mysteries, like clues, it’s unclear. Then, let’s say that at the last page of the book, the detective has gathered all the clues, gathered all the people, and saying, Okay, I’m going to reveal the identity of whoever committed the crime. And that person’s name is – now predict that word,” he said.

Sutskever's point seemed to be that predicting the next word in this case, the name of the criminal, is far from trivial. To predict it correctly, the LLM would need to absorb everything it had been fed, understand the relationships between characters and events, pick up on small clues, and finally reach a conclusion about who the criminal is. In his view, this represents real reasoning power.

Sutskever has been bullish on the core technology behind LLMs for a while. More than a decade ago, he was already arguing that scaling up neural nets could lead to serious advances in machine intelligence. As more sophisticated hardware became available, neural nets grew steadily more powerful, and advances such as the introduction of the transformer architecture helped create extremely capable Large Language Models. And with people like NVIDIA CEO Jensen Huang saying that AI is advancing 8x faster than even Moore's Law, it appears that LLMs' ability to simply predict the next word could take us very far on the journey towards superintelligence.