ChatGPT has taken the world by storm: people are marveling at its AI-generated responses, it’s already being used across professions, it has prompted Google to launch its own competitor, and it’s even being used to promote rap songs. But the seeds of the technology that underlies ChatGPT have a distinct Indian connection.

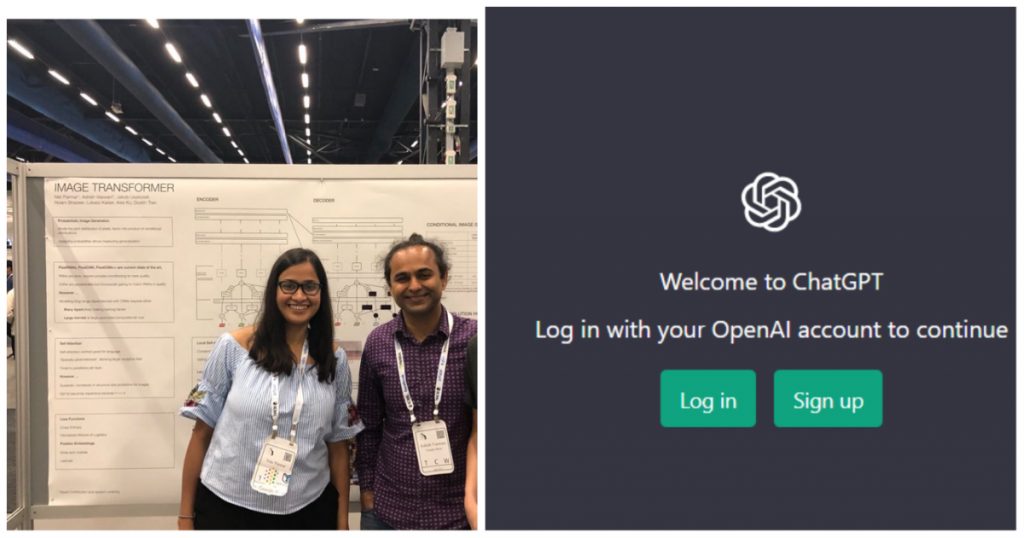

The seminal 2017 paper that described the Transformer architecture used in ChatGPT had two Indian co-authors. The lead author of the paper, titled “Attention is all you need”, was Ashish Vaswani, and among the seven other authors was Niki Parmar. Both had completed their engineering degrees in India before moving to the US for higher studies.

The “Attention is all you need” paper proposed the Transformer architecture that underpins programs like ChatGPT. GPT stands for Generative Pre-trained Transformer, where the “transformer” is the neural network that generates the AI’s responses. Since its publication, the architecture described in the paper has become commonplace in the AI field and the basis of a number of AI applications, including ChatGPT.

Ashish Vaswani, who is credited as the lead author of the paper, completed his engineering degree in Computer Science at BIT Mesra in 2002. In 2004, he moved to the US for a Master’s degree at the University of Southern California, and went on to complete his PhD at the same institution in 2014. In 2016, he joined Google Brain, Google’s deep learning research team, as a Staff Research Scientist. It was while at Google that he co-wrote the “Attention is all you need” paper.

Another co-author on the paper was Niki Parmar. Parmar completed a BE in Information Technology at Pune Institute of Computer Technology in 2012. She worked in Pune as a Software Engineer at PubMatic for a year before moving to the University of Southern California for her Master’s degree. In 2015, she joined Google as a software engineer, and she joined the Google Brain team in 2017.

When the paper was published, the authors hadn’t quite grasped the importance of their work or the impact transformers would have on the field of Artificial Intelligence. But the AI community soon caught on to the new approach and began using transformers in a variety of applications. The most famous use case of a Transformer is ChatGPT, which uses a variant of the architecture known as a Generative Pre-trained Transformer.

There’s no shortage of Indian-origin CEOs in Silicon Valley (the current CEOs of Microsoft, Google, IBM, Adobe and many other companies are all of Indian origin), but there are also plenty of Indian researchers doing cutting-edge research in Computer Science. And two homegrown Indians, who completed their undergraduate degrees in the country, have now played a small part in a breakthrough AI technology that looks poised to alter every aspect of our lives in the years to come.